Dropout

Description

This module performs the dropout operation.

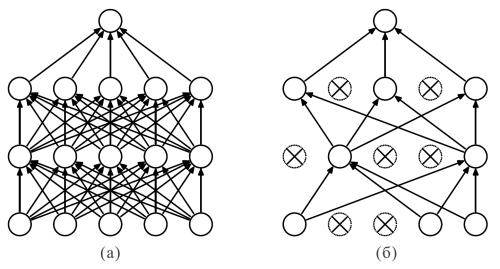

Dropout is used to reduce the risk of overfitting a model. During training, the dropout layer randomly zeroes some of the elements of the input tensor. Figure 1.b schematically shows the operating principle of the module (dropout is applied to each layer).

In addition, the output of each remaining neuron is scaled by a factor of $\frac{1}{1 - p}$, where $p$ is the probability of excluding a neuron, so that the expected value of the output stays the same.

In test (inference) mode, the dropout mechanism is disabled.
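The behaviour described above can be sketched in plain NumPy. This is only an illustration of the technique (often called inverted dropout), not the PuzzleLib kernel: the function name `dropout_forward` and its `train` flag are hypothetical, and the sketch assumes `p < 1`.

```python
import numpy as np

def dropout_forward(x, p=0.5, train=True, rng=None):
    """Inverted dropout on a NumPy array (illustrative sketch, assumes p < 1)."""
    if not train or p == 0.0:
        # Inference mode: dropout is disabled, the input passes through unchanged.
        return x
    rng = np.random.default_rng() if rng is None else rng
    # Each element is kept with probability 1 - p and zeroed with probability p.
    mask = rng.random(x.shape) >= p
    # Survivors are scaled by 1 / (1 - p) to preserve the expected value.
    return x * mask / (1.0 - p)

x = np.ones((1000, 100), dtype=np.float32)
y = dropout_forward(x, p=0.5, rng=np.random.default_rng(0))

print(np.unique(y))  # the only values present are 0 and 1 / (1 - p) = 2
print(y.mean())      # stays close to the input mean of 1
```

Because the scaling is applied during training, nothing needs to be rescaled at inference time, which is why the layer can simply be switched off in test mode.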

Additional sources

Initializing

def __init__(self, p=0.5, rng=globalRng, slicing=None, inplace=False, name=None):

Parameters

| Parameter | Allowed types | Description | Default |
|---|---|---|---|
| p | float | Probability of excluding neurons | 0.5 |
| rng | object | Random number generator object (backend-dependent) | globalRng |
| slicing | slice | Slice to which the operation is applied | None |
| inplace | bool | If True, the output tensor is written to memory in place of the input tensor | False |
| name | str | Layer name | None |

Explanations

rng - the random number generator. It is backend-dependent, i.e. it depends on the platform on which the computations run: for example, with an Nvidia GPU and CUDA, the generator is created using the cuRAND library;

slicing - the slice is specified with the Python built-in slice object. Keep in mind that the slice indices refer to the flattened data tensor;

inplace - a flag indicating whether the result overwrites the input instead of being written to additionally allocated memory. If True, the output tensor is written in place of the input tensor in memory, which can corrupt the results of the network if the input tensor also takes part in calculations on other branches of the graph.
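The hazard of the inplace flag can be illustrated with a small NumPy sketch. The helper `scale_op` is a hypothetical stand-in for a layer with an inplace option; it is not part of PuzzleLib and only mimics the memory behaviour described above.

```python
import numpy as np

def scale_op(x, factor, inplace=False):
    # Hypothetical stand-in for a layer with an `inplace` flag.
    # inplace=False allocates a new buffer, inplace=True reuses the input buffer.
    out = x if inplace else np.empty_like(x)
    np.multiply(x, factor, out=out)
    return out

x = np.arange(4, dtype=np.float32)

# Out-of-place: the input is left untouched.
branch_a = scale_op(x, 2.0, inplace=False)
x_after_a = x.copy()

# In-place: the result shares memory with the input, so x itself changes.
branch_b = scale_op(x, 2.0, inplace=True)

# Another branch that reads x now sees the overwritten values.
other_branch = x + 1.0

print(x_after_a)     # original values
print(x)             # overwritten by the in-place call
print(other_branch)  # computed from the overwritten input
```

In-place mode saves one buffer, so it is a reasonable choice only when no other branch of the graph reads the same input tensor.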

Examples

Necessary imports.

import numpy as np

from PuzzleLib.Backend import gpuarray

from PuzzleLib.Modules import Dropout

Info

gpuarray is required to place the tensor on the GPU.

Let us generate the data tensor. For simplicity, the batch size and the number of maps are both set to 1:

batchsize, maps, h, w = 1, 1, 5, 5

data = gpuarray.to_gpu(np.arange(batchsize * maps * h * w).reshape((batchsize, maps, h, w)).astype(np.float32))

print(data)

[[[[ 0. 1. 2. 3. 4.]

[ 5. 6. 7. 8. 9.]

[10. 11. 12. 13. 14.]

[15. 16. 17. 18. 19.]

[20. 21. 22. 23. 24.]]]]

Let us initialize the module with default values:

dropout = Dropout()

print(dropout(data))

[[[[ 0. 0. 4. 6. 8.]

[ 0. 12. 14. 0. 0.]

[ 0. 0. 24. 26. 28.]

[30. 32. 0. 36. 38.]

[40. 0. 0. 46. 0.]]]]
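The printed result can be checked against the scaling rule from the description. The sketch below uses only NumPy and copies the values from the output above; since the mask is random, a real run will zero a different set of elements.

```python
import numpy as np

p = 0.5  # default exclusion probability

data = np.arange(25, dtype=np.float32).reshape((1, 1, 5, 5))

# Values copied from the printed dropout output above.
out = np.array([[[[ 0.,  0.,  4.,  6.,  8.],
                  [ 0., 12., 14.,  0.,  0.],
                  [ 0.,  0., 24., 26., 28.],
                  [30., 32.,  0., 36., 38.],
                  [40.,  0.,  0., 46.,  0.]]]], dtype=np.float32)

# Non-zero outputs are the elements that certainly survived.
# (Element 0 is ambiguous: its input is 0, so it prints as 0 either way.)
kept = out != 0

# Every surviving element equals its input scaled by 1 / (1 - p) = 2.
print(np.allclose(out[kept], data[kept] / (1.0 - p)))
print(int(kept.sum()), "of", kept.size, "elements are non-zero")
```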

Now let us set the slicing parameter (note that we set the slice for the flattened version of the data tensor):

dropout = Dropout(slicing=slice((h * w) // 2, h * w))

print(dropout(data))

[[[[ 0. 1. 2. 3. 4.]

[ 5. 6. 7. 8. 9.]

[10. 11. 24. 26. 0.]

[30. 0. 34. 0. 38.]

[ 0. 42. 0. 46. 48.]]]]
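To see which elements the slice covers, the flat indices can be mapped back to tensor coordinates. The following NumPy sketch mimics the behaviour shown above under the assumption that the slice simply indexes the flattened tensor; `dropout_on_slice` is a hypothetical helper, not a PuzzleLib function.

```python
import numpy as np

def dropout_on_slice(x, sl, p=0.5, rng=None):
    """Apply inverted dropout only to x.reshape(-1)[sl] (illustrative sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    out = x.copy()          # contiguous copy, the input stays untouched
    flat = out.reshape(-1)  # flattened view that shares memory with `out`
    region = flat[sl]
    mask = rng.random(region.shape) >= p
    flat[sl] = region * mask / (1.0 - p)
    return out

h, w = 5, 5
data = np.arange(h * w, dtype=np.float32).reshape((1, 1, h, w))
sl = slice((h * w) // 2, h * w)  # flat indices 12..24

# Coordinates of the first affected element in the 4-D tensor.
start = tuple(int(i) for i in np.unravel_index(sl.start, data.shape))
print(start)  # (0, 0, 2, 2): third row, third column

out = dropout_on_slice(data, sl, rng=np.random.default_rng(0))
print(out)
```

Elements with flat index below 12 are returned unchanged, which matches the first two rows and the first two entries of the third row in the output above.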